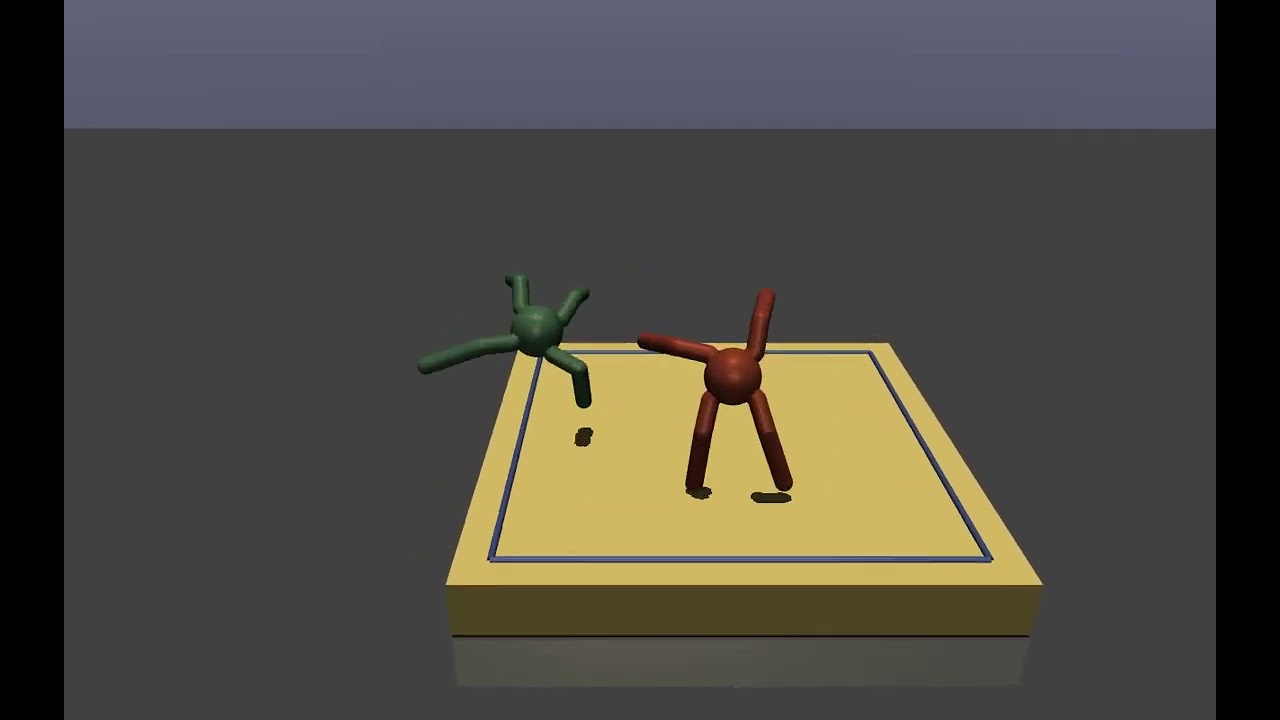

Imagine two teams squaring off on a football field. The players can cooperate to achieve an objective, and compete against other players with conflicting interests. That’s how the game works.

Creating artificial intelligence agents that can learn to compete and cooperate as effectively as humans remains a thorny problem. A key challenge is enabling AI agents to anticipate the future behaviors of other agents when they are all learning simultaneously.

Because of the complexity of this problem, current approaches tend to be myopic; the agents can only guess the next few moves of their teammates or competitors, which leads to poor performance in the long run.

Researchers from MIT, the MIT-IBM Watson AI Lab, and elsewhere have developed a new approach that gives AI agents a farsighted perspective. Their machine-learning framework enables cooperative or competitive AI agents to consider what other agents will do as time approaches infinity, not just over the next few steps. The agents then adapt their behaviors accordingly to influence other agents’ future behaviors and arrive at an optimal, long-term solution.

This framework could be used by a group of autonomous drones working together to find a lost hiker in a thick forest, or by self-driving cars that strive to keep passengers safe by anticipating the future moves of other vehicles on a busy highway.

“When AI agents are cooperating or competing, what matters most is when their behaviors converge at some point in the future. There are a lot of transient behaviors along the way that don’t matter very much in the long run. Reaching this converged behavior is what we really care about, and we now have a mathematical way to enable that,” says Dong-Ki Kim, a graduate student in the MIT Laboratory for Information and Decision Systems (LIDS) and lead author of a paper describing this framework.

The senior author is Jonathan P. How, the Richard C. Maclaurin Professor of Aeronautics and Astronautics and a member of the MIT-IBM Watson AI Lab. Co-authors include others at the MIT-IBM Watson AI Lab, IBM Research, Mila-Quebec Artificial Intelligence Institute, and Oxford University. The research will be presented at the Conference on Neural Information Processing Systems.

In this demonstration video, the red robot, which has been trained using the researchers’ machine-learning system, is able to defeat the green robot by learning more effective behaviors that take advantage of its opponent’s constantly changing strategy.

More agents, more problems

The researchers focused on a problem known as multiagent reinforcement learning. Reinforcement learning is a form of machine learning in which an AI agent learns by trial and error. Researchers give the agent a reward for “good” behaviors that help it achieve a goal. The agent adapts its behavior to maximize that reward until it eventually becomes an expert at a task.
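To make “learning by trial and error” concrete, here is a minimal single-agent sketch. It is not the researchers’ method: it uses a textbook epsilon-greedy multi-armed bandit, and the function name `train_bandit`, the reward means, and the parameters `epsilon` and `steps` are all hypothetical choices for illustration. The agent does not know which action pays best; it tries actions, observes noisy rewards, and updates its estimates toward what it sees.

```python
import random

def train_bandit(true_means, steps=5000, epsilon=0.1, seed=0):
    """Learn action-value estimates for a multi-armed bandit by trial and error.

    true_means: hypothetical expected reward of each action (hidden from the agent).
    Returns the agent's estimated value of each action.
    """
    rng = random.Random(seed)
    n_actions = len(true_means)
    estimates = [0.0] * n_actions  # agent's current guess of each action's value
    counts = [0] * n_actions       # how many times each action has been tried

    for _ in range(steps):
        # Explore with probability epsilon; otherwise exploit the best-looking action.
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])
        # The environment returns a noisy reward for the chosen action.
        reward = rng.gauss(true_means[action], 1.0)
        # Incremental sample-average update toward the observed reward.
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates

values = train_bandit([0.2, 0.5, 1.0])
best = max(range(len(values)), key=lambda a: values[a])
print(values, best)
```

With enough steps the agent should come to rank the highest-paying action first; the occasional random exploration is what keeps it from locking onto an early, unlucky guess.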

But when many cooperative or competing agents are learning simultaneously, things become increasingly complex. As agents consider more future steps of their fellow agents, and how their own behavior influences others, the problem soon requires far too much computational power to solve efficiently. This is why other approaches focus only on the short term.
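A back-of-the-envelope count shows why looking further ahead gets expensive so quickly. The sketch below is a simplified illustration, not the authors’ complexity analysis: it assumes a fixed number of agents, each choosing among the same number of actions every step, and it ignores environment states and randomness. The function name `joint_histories` is hypothetical.

```python
def joint_histories(n_agents: int, n_actions: int, horizon: int) -> int:
    """Number of distinct joint-action sequences over `horizon` steps.

    Each step, every agent picks one of `n_actions`, giving
    n_actions ** n_agents joint actions per step; sequences multiply.
    """
    return (n_actions ** n_agents) ** horizon

# Two agents with three actions each, looking 1, 5, 10, and 20 steps ahead.
for horizon in (1, 5, 10, 20):
    print(horizon, joint_histories(n_agents=2, n_actions=3, horizon=horizon))
```

Even in this tiny setting, 9 possibilities for one step become 59,049 for five steps and about 3.5 billion for ten, which is why enumerating the other agents’ futures step by step stops being practical.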

“The AIs really want to think about the end of the game, but they don’t know when the game will end. They need to think about how to keep adapting their behavior into infinity so they can win at some far time in the future. Our paper essentially proposes a new objective that enables an AI to think about infinity,” says Kim.

But since it is impossible to plug infinity into an algorithm, the researchers designed their system so agents focus on a future point where their behavior will converge with that of other agents, known as an equilibrium. An equilibrium point determines the long-term performance of agents, and multiple equilibria can exist in a multiagent scenario. Therefore, an effective agent actively influences the future behaviors of other agents in such a way that they reach an equilibrium that is desirable from the agent’s perspective. If all agents influence each other, they converge to a general concept that the researchers call an “active equilibrium.”
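The claim that several equilibria can coexist, and that some are better than others, can be checked on a toy game. This sketch uses the standard pure-strategy Nash equilibrium from game theory as a stand-in; it is not the researchers’ “active equilibrium,” which is a more general concept from their paper. The payoff table and the function name `pure_nash_equilibria` are hypothetical.

```python
from itertools import product

# Hypothetical 2x2 coordination game:
# payoffs[(row_action, col_action)] = (row_payoff, col_payoff).
# Both players want to match, and matching on "A" pays more than matching on "B".
payoffs = {
    ("A", "A"): (2, 2),
    ("A", "B"): (0, 0),
    ("B", "A"): (0, 0),
    ("B", "B"): (1, 1),
}
actions = ["A", "B"]

def pure_nash_equilibria(payoffs, actions):
    """Return joint actions where neither player gains by deviating alone."""
    equilibria = []
    for row, col in product(actions, actions):
        row_payoff, col_payoff = payoffs[(row, col)]
        row_best = all(payoffs[(r, col)][0] <= row_payoff for r in actions)
        col_best = all(payoffs[(row, c)][1] <= col_payoff for c in actions)
        if row_best and col_best:
            equilibria.append((row, col))
    return equilibria

print(pure_nash_equilibria(payoffs, actions))  # [('A', 'A'), ('B', 'B')]
```

Both outcomes are stable once reached, yet ("A", "A") is better for both players. An agent that can steer its partner toward "A" ends up at the more desirable equilibrium, which is the intuition behind actively influencing where other agents converge.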

The machine-learning framework they developed, known as FURTHER (which stands for FUlly Reinforcing acTive influence witH averagE Reward), enables agents to learn how to adapt their behaviors as they interact with other agents to achieve this active equilibrium.
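The “averagE Reward” in the name points to the average-reward criterion from reinforcement learning, which scores behavior by the limit, as T grows, of the expected total reward over T steps divided by T. The minimal sketch below illustrates that generic criterion on a fixed, hypothetical two-state Markov chain; it is not FURTHER’s objective, which per the article also accounts for how other agents keep learning. The transition matrix `P`, the `rewards`, and the helper names are assumptions.

```python
# Hypothetical two-state Markov chain: row i gives transition probabilities from state i.
P = [[0.9, 0.1],
     [0.2, 0.8]]
rewards = [1.0, 0.0]  # reward collected in each state

def step(dist, P):
    """Advance a probability distribution over states by one transition."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]

def average_reward(P, rewards, iters=1000):
    """Long-run average reward: expected reward under the stationary
    distribution, found here by power iteration."""
    dist = [1.0 / len(P)] * len(P)
    for _ in range(iters):
        dist = step(dist, P)
    return sum(d * r for d, r in zip(dist, rewards))

def finite_average(P, rewards, start, horizon):
    """Expected average reward over the first `horizon` steps from state `start`."""
    dist = [1.0 if s == start else 0.0 for s in range(len(P))]
    total = 0.0
    for _ in range(horizon):
        total += sum(d * r for d, r in zip(dist, rewards))
        dist = step(dist, P)
    return total / horizon

print(average_reward(P, rewards))
for start in (0, 1):
    print(start, finite_average(P, rewards, start, 10), finite_average(P, rewards, start, 10000))
```

Over 10 steps, the average depends heavily on the starting state (roughly 0.77 versus 0.45 here); over 10,000 steps, both approach the long-run value of 2/3. That is the sense in which an average-reward objective looks past transient behavior to where things settle.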

FURTHER does this using two machine-learning modules. The first, an inference module, enables an agent to guess the future behaviors of other agents and the learning algorithms they use, based solely on their prior actions.

This information is fed into the reinforcement learning module, which the agent uses to adapt its behavior and influence other agents in a way that maximizes its reward.
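The two-stage pipeline (infer what the other agent is doing, then choose a behavior based on that inference) can be illustrated with a deliberately simple stand-in. The sketch below swaps in a classic technique, empirical action frequencies followed by a best response (the idea behind fictitious play), in rock-paper-scissors. It is not FURTHER’s modules: the article says FURTHER’s inference module also estimates other agents’ learning algorithms, and its reinforcement learning module learns over time rather than computing a one-shot response. All names and payoffs here are hypothetical.

```python
from collections import Counter

# Hypothetical payoff table for our agent: my_payoff[my_action][their_action].
my_payoff = {
    "rock":     {"rock": 0, "paper": -1, "scissors": 1},
    "paper":    {"rock": 1, "paper": 0, "scissors": -1},
    "scissors": {"rock": -1, "paper": 1, "scissors": 0},
}

def infer_opponent_policy(history):
    """Inference step (simplified): estimate the other agent's action
    probabilities from the actions it has taken so far."""
    counts = Counter(history)
    total = sum(counts.values())
    return {a: counts[a] / total for a in my_payoff}

def best_response(opponent_policy):
    """Decision step (simplified): pick the action with the highest
    expected payoff against the inferred policy."""
    def expected(my_action):
        return sum(p * my_payoff[my_action][a] for a, p in opponent_policy.items())
    return max(my_payoff, key=expected)

observed = ["rock", "rock", "paper", "rock", "scissors", "rock"]
policy = infer_opponent_policy(observed)
print(policy)
print(best_response(policy))  # paper
```

Note that this stand-in only reacts to the opponent’s past frequencies and never asks how the opponent will adapt in response. That short-sightedness is exactly the gap the article says FURTHER is designed to close.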

“The challenge was thinking about infinity. We had to use a lot of different mathematical tools to enable that, and make some assumptions to get it to work in practice,” Kim says.

Winning in the long run

They tested their approach against other multiagent reinforcement learning frameworks in several different scenarios, including a pair of robots fighting sumo-style and a battle pitting two 25-agent teams against one another. In both instances, the AI agents using FURTHER won the games more often.

Since their approach is decentralized, meaning the agents learn to win the games independently, it is also more scalable than other methods that require a central computer to control the agents, Kim explains.
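One rough way to see why decentralization helps with scale is to compare how many options a single central controller must choose among with how many each independent agent faces. This is a simplified illustration under assumed numbers, not an analysis from the paper: the team size of 25 comes from the battle scenario, while the five actions per agent and the function names are hypothetical. It also ignores the coordination problems that decentralized agents still have to solve.

```python
def centralized_choices(n_agents: int, n_actions: int) -> int:
    """One central controller picks a joint action for all agents at once:
    n_actions ** n_agents options per step."""
    return n_actions ** n_agents

def decentralized_choices(n_agents: int, n_actions: int) -> int:
    """Each agent picks only its own action: n_actions options per agent,
    n_agents * n_actions options in total across the team."""
    return n_agents * n_actions

team_size = 25  # matches the 25-agent teams in the battle scenario
n_actions = 5   # hypothetical number of actions per agent
print(centralized_choices(team_size, n_actions))    # 298023223876953125
print(decentralized_choices(team_size, n_actions))  # 125
```

The central controller’s choice set grows exponentially with team size, while the decentralized total grows only linearly.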

The researchers used games to test their approach, but FURTHER could be used to tackle any kind of multiagent problem. For instance, it could be applied by economists seeking to develop sound policy in situations where many interacting entities have behaviors and interests that change over time.

Economics is one application Kim is particularly excited about studying. He also wants to dig deeper into the concept of an active equilibrium and continue enhancing the FURTHER framework.

This research is funded, in part, by the MIT-IBM Watson AI Lab.