The world is mesmerized by artificial intelligence (AI), especially by recent advances in natural language processing (NLP) and generative AI, and for good reason. These breakthrough technologies have the potential to enhance day-to-day productivity across tasks of all kinds. For example, GitHub Copilot helps developers rapidly code entire algorithms, OtterPilot automatically generates meeting notes for executives, and Mixo allows entrepreneurs to rapidly launch websites.

This article will give a brief overview of generative AI, including relevant AI technology examples, then put theory into action with a generative AI tutorial in which we'll create artistic renderings using GPT and diffusion models.

Brief Overview of Generative AI

Note: Readers familiar with the technical concepts behind generative AI may skip this section and continue to the tutorial.

In 2022, many foundation model applications came to market, accelerating AI advances across many sectors. We can better define a foundation model after understanding a few key concepts:

- Artificial intelligence is a generic term describing any software that can intelligently pursue a specific task.

- Machine learning is a subset of artificial intelligence that uses algorithms that learn from data.

- A neural network is a subset of machine learning that uses layered nodes modeled after the human brain.

- A deep neural network is a neural network with many layers and learnable parameters.
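As a concrete illustration of "layers" and "learnable parameters," here is a minimal sketch of a small fully connected network in plain Python. Everything in it is an illustrative assumption rather than how any production framework works: the helper names (count_parameters, init_layers, forward), the layer sizes, the random initial weights, and the ReLU activation. Each layer computes weighted sums of the previous layer's outputs; the weights and biases are the parameters that training would adjust (no training happens here).

```python
import random

def count_parameters(layer_sizes: list[int]) -> int:
    """Count weights plus biases in a fully connected network.

    A layer with n_in inputs and n_out outputs has an n_in x n_out
    weight matrix and n_out biases.
    """
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

def init_layers(layer_sizes: list[int], seed: int = 0):
    """Create one (weights, biases) pair per layer with random weights."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers

def forward(layers, inputs: list[float]) -> list[float]:
    """Pass inputs through every layer; hidden layers apply ReLU."""
    activations = inputs
    for i, (weights, biases) in enumerate(layers):
        outputs = [sum(w * a for w, a in zip(row, activations)) + b
                   for row, b in zip(weights, biases)]
        if i < len(layers) - 1:  # ReLU on hidden layers only
            outputs = [max(0.0, x) for x in outputs]
        activations = outputs
    return activations

sizes = [4, 8, 8, 2]  # input layer, two hidden layers, output layer
print(count_parameters(sizes))  # 130
print(forward(init_layers(sizes), [0.5, -0.2, 0.1, 0.9]))
```

For these sizes the count is 40 + 72 + 18 = 130 parameters; foundation models apply the same bookkeeping at a scale of billions.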

A foundation model is a deep neural network trained on vast amounts of raw data. In more practical terms, a foundation model is a highly capable type of AI that can easily adapt to and accomplish various tasks. Foundation models are at the core of generative AI: Both text-generating language models like GPT and image-generating diffusion models are foundation models.

Text: NLP Models

In generative AI, natural language processing (NLP) models are trained to produce text that reads as though it were written by a human. In particular, large language models (LLMs) are especially relevant to today's AI systems. LLMs, characterized by their use of vast amounts of training data, can recognize and generate text and other content.

In practice, these models may serve as writing, or even coding, assistants. Natural language processing applications include restating complex concepts simply, translating text, drafting legal documents, and even creating workout plans (though such uses have certain limitations).

Lex is one example of an NLP writing tool with many features: suggesting titles, completing sentences, and composing entire paragraphs on a given topic. The most instantly recognizable LLM of the moment is GPT. Developed by OpenAI, GPT can respond to almost any question or command quickly and with high accuracy. OpenAI's various models are available through a single API. Unlike Lex, GPT can work with code, programming solutions to functional requirements and identifying in-code issues to make developers' lives notably easier.

Images: AI Diffusion Models

A diffusion model is a deep neural network with latent variables that learns the structure of images by learning to remove noise from them. After a model's network is trained to "understand" the abstract concept behind an image, it can create new variations of that image. For example, by removing the noise from an image of a cat, the diffusion model "sees" a clean image of the cat, learns how the cat looks, and applies this knowledge to create new cat image variations.
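The "remove the noise" idea can be sketched numerically. The snippet below follows the forward process of denoising diffusion probabilistic models (DDPM), one common formulation: noise is mixed into an image according to a schedule, and a network is trained to predict that noise so it can be subtracted again. The linear schedule values, the four-pixel stand-in "image," and the function names are assumptions for illustration; here the true noise is passed back in, whereas a real model would supply its own prediction of it.

```python
import math
import random

def alpha_bars(num_steps: int = 1000, beta_start: float = 1e-4,
               beta_end: float = 0.02) -> list[float]:
    """Cumulative products of (1 - beta_t) for a linear noise schedule."""
    result, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        result.append(running)
    return result

def add_noise(x0, t, schedule, rng):
    """Forward step: x_t = sqrt(a_bar) * x0 + sqrt(1 - a_bar) * eps."""
    a_bar = schedule[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a_bar) * p + math.sqrt(1 - a_bar) * e for p, e in zip(x0, eps)]
    return xt, eps

def estimate_x0(xt, eps_pred, t, schedule):
    """Undo the forward step given a noise estimate (a trained net supplies eps_pred)."""
    a_bar = schedule[t]
    return [(x - math.sqrt(1 - a_bar) * e) / math.sqrt(a_bar)
            for x, e in zip(xt, eps_pred)]

schedule = alpha_bars()
rng = random.Random(42)
image = [0.9, 0.1, 0.5, 0.3]  # tiny stand-in for pixel values
noisy, true_noise = add_noise(image, t=500, schedule=schedule, rng=rng)
recovered = estimate_x0(noisy, true_noise, t=500, schedule=schedule)
print([round(v, 6) for v in noisy])
print([round(v, 6) for v in recovered])
```

With a perfect noise estimate the original pixels come back exactly; training is about making the network's estimate good enough that repeated denoising steps yield plausible new images.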

Diffusion models can be used to denoise or sharpen images (enhancing and refining them), manipulate facial expressions, or generate face-aging images to suggest how a person might look over time. You can browse the Lexica search engine to see what these AI models can do when it comes to generating new images.

Tutorial: Diffusion Model and GPT Implementation

To demonstrate how to implement and use these technologies, let's practice generating anime-style images using a HuggingFace diffusion model and GPT, neither of which requires any complex infrastructure or software. We will start with a ready-to-use model (i.e., one that's already built and pre-trained) that we will only need to fine-tune.

Note: This article explains how to use generative AI image and language models to create high-quality images of yourself in interesting styles. The information in this article should not be (mis)used to create deepfakes in violation of Google Colaboratory's terms of use.

Setup and Photo Requirements

To get ready for this tutorial, register at:

You'll also need 20 photos of yourself (or even more, for improved performance) saved on the device you plan to use for this tutorial. For best results, photos should:

- Be no smaller than 512 x 512 px.

- Be of you and only you.

- Share the same file format (extension).

- Be taken from a variety of angles.

- Include three to five full-body shots and two to three midbody shots at a minimum; the rest should be facial photos.
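Before uploading, it can help to check a photo set against the size, count, and format requirements. The helper below is a hypothetical sketch, not part of the companion notebook: check_photos takes a mapping of file names to (width, height) in pixels and returns a list of problems. It assumes you read the sizes yourself (for example, with Pillow's Image.open(path).size) and treats extensions case-insensitively; it cannot check angles or who is in the shot.

```python
from pathlib import Path

MIN_SIDE = 512      # minimum width and height in pixels
MIN_PHOTOS = 20     # recommended minimum number of photos

def check_photos(photos: dict[str, tuple[int, int]]) -> list[str]:
    """Return human-readable problems found in a photo set.

    `photos` maps file names to (width, height) in pixels.
    """
    problems = []
    if len(photos) < MIN_PHOTOS:
        problems.append(f"{len(photos)} photo(s) found; at least {MIN_PHOTOS} recommended")
    # Compare extensions case-insensitively, so .JPG and .jpg count as one format.
    extensions = {Path(name).suffix.lower() for name in photos}
    if len(extensions) > 1:
        problems.append(f"mixed extensions: {sorted(extensions)}")
    for name, (width, height) in sorted(photos.items()):
        if width < MIN_SIDE or height < MIN_SIDE:
            problems.append(f"{name} is {width}x{height}, smaller than {MIN_SIDE}x{MIN_SIDE}")
    return problems

example = {"front.jpg": (1024, 1024), "side.JPG": (800, 600), "full_body.png": (400, 900)}
for problem in check_photos(example):
    print(problem)
```

An empty list means the set meets the checkable requirements.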

That said, the photos don't need to be perfect; it can even be instructive to see how straying from these requirements affects the output.

AI Image Generation With the HuggingFace Diffusion Model

To begin, open this tutorial's companion Google Colab notebook, which contains the required code.

- Run cell 1 to connect Colab with your Google Drive so you can store the model and save its generated images later.

- Run cell 2 to install the necessary dependencies.

- Run cell 3 to download the HuggingFace model.

- In cell 4, type “How I Look” in the

Session_Namefield, and after that run the cell. Session name normally recognizes the principle that the design will find out. - Run cell 5 and publish your pictures.

- Go to cell 6 to train the design. By examining the

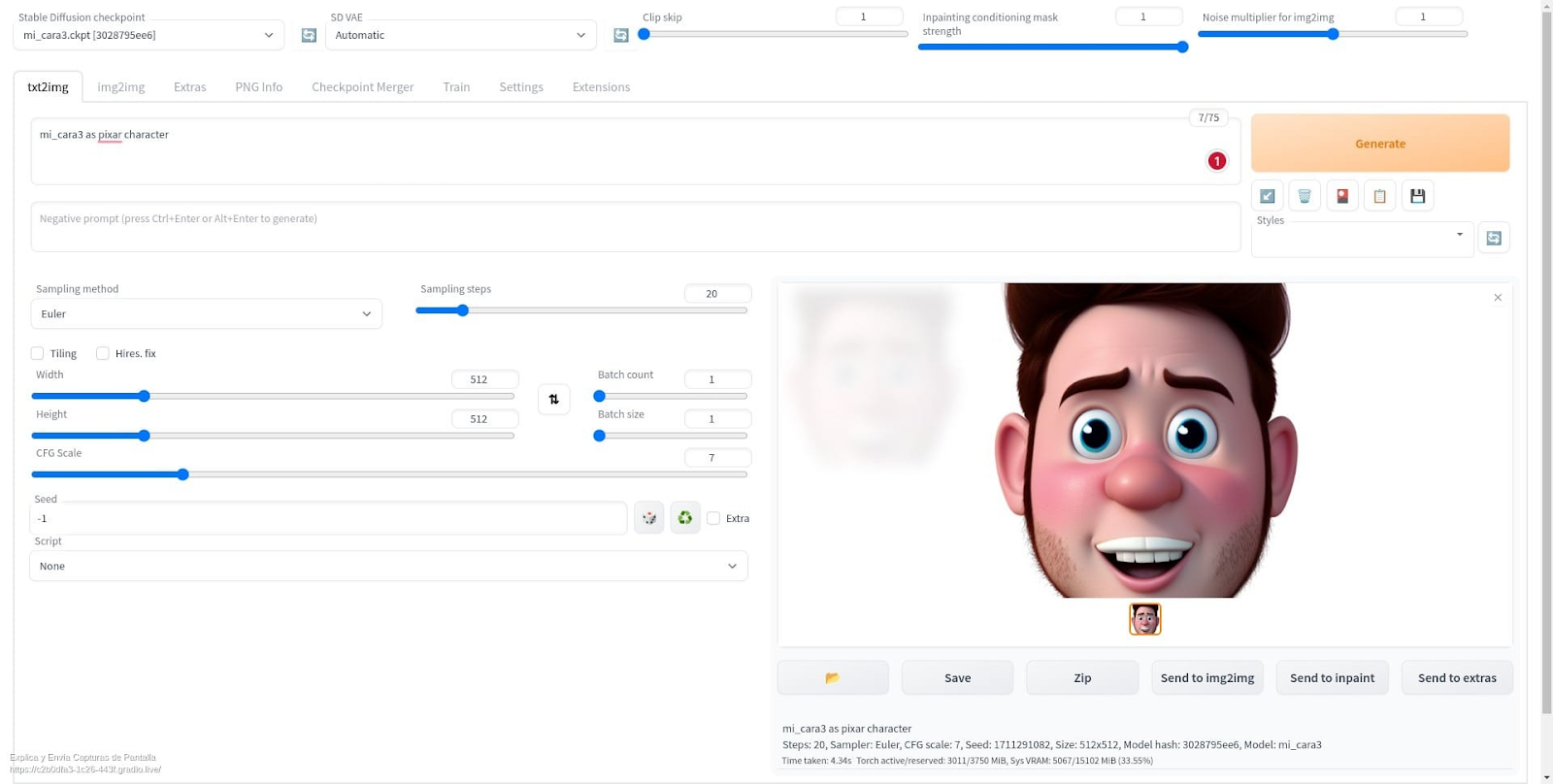

Resume_Trainingchoice prior to running the cell, you can re-train it lot of times. (This action might take around an hour to finish.) - Lastly, run cell 7 to check your design and see it in action. The system will output an URL where you will discover a user interface to produce your images. After going into a timely, push the Create button to render images.

With a working model, we can now experiment with various prompts that produce different visual styles (e.g., "me as an animated character" or "me as an impressionist painting"). However, using GPT to write character prompts works better, as it typically yields more detail than user-written prompts and makes fuller use of our model's potential.
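If you want to try a handful of styles by hand first, a small helper can build the prompts for you. This is a hypothetical convenience, not part of the companion notebook; it assumes, as the tutorial does later when it substitutes the session name into a GPT request, that the session name from cell 4 ("How I Look") is the term the fine-tuned model associates with your photos, so every prompt includes it.

```python
SESSION_NAME = "How I Look"  # the value typed into Session_Name in cell 4

STYLES = [
    "an animated character",
    "an impressionist painting",
]

def style_prompts(session_name: str, styles: list[str]) -> list[str]:
    """Return one prompt per style, each naming the learned concept."""
    return [f"{session_name} as {style}" for style in styles]

for prompt in style_prompts(SESSION_NAME, STYLES):
    print(prompt)
```

Each printed line can be pasted into the cell 7 interface as-is.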

Effective Diffusion Model Prompts With GPT

We'll add GPT to our pipeline via OpenAI, though Cohere and the other options offer similar functionality for our purposes. To begin, register on the OpenAI platform and create your API key. Then, in the Colab notebook's "Getting good prompts" section, install the OpenAI library:

```
# The code below uses the pre-1.0 interface of the openai package.
pip install "openai<1.0"
```

Next, import the library and set your API key:

```python
import openai

openai.api_key = "YOUR_API_KEY"  # replace with the key created on the OpenAI platform
```

We will request an optimized prompt from GPT to generate our image in the style of an anime character, replacing YOUR_SESSION_NAME with "How I Look," the session name set in cell 4 of the notebook:

```python
ASKING_TO_GPT = (
    "Write a prompt to feed a diffusion model to generate beautiful images "
    "of YOUR_SESSION_NAME styled as an anime character."
)

# Legacy (openai<1.0) Completions call. OpenAI has since retired
# text-davinci-003; gpt-3.5-turbo-instruct is its listed replacement.
response = openai.Completion.create(
    model="text-davinci-003",
    prompt=ASKING_TO_GPT,
    temperature=0,
    max_tokens=1000,
)

print(response["choices"][0]["text"])
```

The temperature parameter ranges between 0 and 2 and controls how random the output is: values close to 0 make the model stick to its most likely continuations, producing focused and nearly deterministic text, while values close to 2 make it more varied and "creative." The max_tokens parameter caps the amount of text returned; as a rule of thumb, one token corresponds to roughly four characters of English text, or about three-quarters of a word.
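OpenAI does not publish its exact sampling code, so the following is a generic sketch of how temperature is commonly applied: the model's raw scores (logits) for candidate next tokens are divided by the temperature before a softmax, which sharpens the distribution for small values and flattens it for large ones. The logit values and helper names are assumptions for illustration, temperature 0 is modeled as always picking the top token, and the word estimate uses OpenAI's rule of thumb of about 0.75 words per token.

```python
import math

def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores into probabilities, scaled by temperature.

    temperature == 0 is treated as greedy decoding: all probability
    goes to the highest-scoring token.
    """
    if temperature == 0:
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def approx_words(tokens: int) -> int:
    """Rough English word count for a token budget (~0.75 words per token)."""
    return round(tokens * 0.75)

logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (0, 0.5, 1.0, 2.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
print(approx_words(1000))  # 750
```

The printed rows show the top token's share shrinking as temperature rises, and a max_tokens budget of 1000 allows roughly 750 English words.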

In my case, the GPT model output reads:

"Juan is styled as an anime character, with large, expressive eyes and a small, delicate mouth. His hair is swept up and back, and he wears a simple, yet stylish, outfit. He is the perfect example of a hero, and he always manages to look his best, no matter the situation."

Finally, by feeding this text as input into the diffusion model, we achieve our final output:

Having GPT write diffusion model prompts means that you don't have to think in detail about the nuances of what an anime character looks like; GPT will generate an appropriate description for you. You can always refine the prompt further to taste. With this tutorial completed, you can create complex, creative images of yourself or any concept you want.
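Completions often arrive with leading line breaks, stray quotation marks, or hard line wraps. A small cleanup step before pasting GPT's text into the image interface is one way to handle that; to_diffusion_prompt below is a hypothetical helper, not code from the companion notebook.

```python
def to_diffusion_prompt(completion_text: str) -> str:
    """Tidy a GPT completion before using it as a diffusion prompt.

    Strips surrounding whitespace and quotation marks, then collapses
    line breaks and repeated spaces into single spaces.
    """
    text = completion_text.strip().strip('"').strip()
    return " ".join(text.split())

raw = '\n\n" Juan is styled as an anime character,\nwith large, expressive eyes."'
print(to_diffusion_prompt(raw))
# Juan is styled as an anime character, with large, expressive eyes.
```

In the notebook, you could apply it to response["choices"][0]["text"] and paste the result into the cell 7 interface.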

The Benefits of AI Are Within Your Reach

GPT and diffusion models are two important modern AI technologies. We have seen how to apply them in isolation and how to amplify their power by pairing them, using GPT output as diffusion model input. In doing so, we have built a pipeline of two generative models in which each makes the most of the other.

These AI technologies will affect our lives profoundly. Many predict that large language models will significantly impact the labor market across a diverse range of occupations, automating certain tasks and enhancing existing roles. While we can't predict the future, it seems safe to say that early adopters who leverage NLP and generative AI to optimize their work will have a leg up on those who don't.

The editorial team of the Toptal Engineering Blog extends its gratitude to Federico Albanese for reviewing the code samples and other technical content presented in this article.