Intro

Let’s get this out of the way up front: understanding effective streaming data architectures is hard, and understanding how to make use of streaming data for analytics is really hard. Kafka or Kinesis? Stream processing or an OLAP database? Open source or fully managed? This blog series will help demystify streaming data and, more specifically, give engineering leaders a guide for incorporating streaming data into their analytics pipelines.

Here is what the series will cover:

- This post will cover the basics: streaming data formats, platforms, and use cases

- Part 2 will outline key differences between stream processing and real-time analytics

- Part 3 will offer recommendations for operationalizing streaming data, including a few sample architectures

- Part 4 will feature a case study highlighting a successful implementation of real-time analytics on streaming data


What Is Streaming Data?

We’re going to start with a basic question: what is streaming data? It’s a continuous and unbounded stream of information that is generated at a high frequency and delivered to a system or application. A familiar example is clickstream data, which records a user’s interactions on a website. Another example is sensor data collected in an industrial setting. The common thread across these examples is that a large amount of data is being generated in real time.

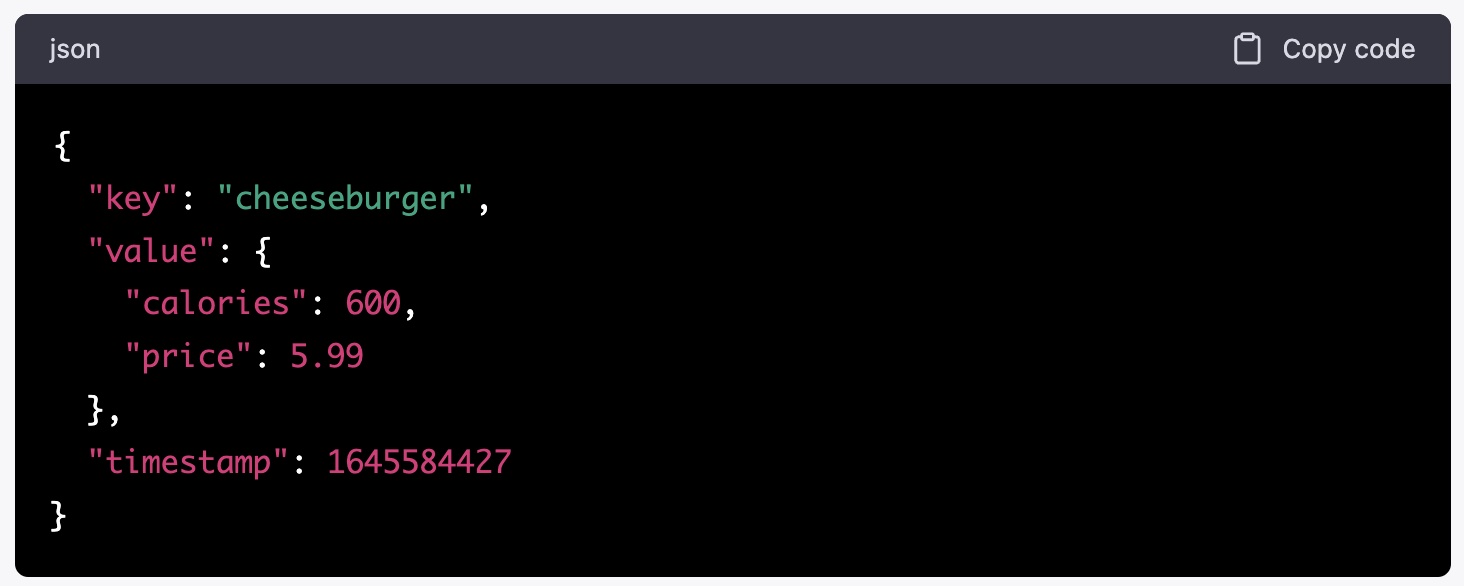

Typically, the “units” of data being streamed are considered events. Events resemble records in a database, with some key differences.

First, event data is unstructured or semi-structured and stored in a nested format like JSON or Avro. Events typically include a key, a value (which can have additional nested elements), and a timestamp.

Second, events are usually immutable (this will be a very important property in this series!).

Third, events on their own are not ideal for understanding the current state of a system. Event streams are great at updating systems with information like “a cheeseburger was sold”. Out of the box, they are less suited to answering “how many cheeseburgers were sold today”.

Lastly, and perhaps most importantly, streaming data is unique because it’s high-velocity and high-volume. There is also an expectation that the data is available for use in the database very soon after the event has occurred.
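The Python sketch below makes the difference between events and state concrete. The `Event` class, its field names, and the `cheeseburgers_sold_on` helper are hypothetical illustrations, not part of any particular platform. Each immutable event records that “a cheeseburger was sold”. Answering “how many were sold today” requires folding over the stream.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, datetime, timezone
from typing import Any, Iterable, Mapping


# frozen=True prevents reassigning fields after creation: a shallow
# stand-in for the immutability of real event records (hypothetical class).
@dataclass(frozen=True)
class Event:
    key: str                  # e.g. an order ID
    value: Mapping[str, Any]  # payload, which may contain nested elements
    timestamp: datetime       # when the event occurred


def cheeseburgers_sold_on(events: Iterable[Event], day: date) -> int:
    """Derive a piece of current state by folding over the event stream."""
    return sum(
        event.value.get("quantity", 0)
        for event in events
        if event.value.get("item") == "cheeseburger"
        and event.timestamp.date() == day
    )


stream = [
    Event("order-1", {"item": "cheeseburger", "quantity": 2},
          datetime(2023, 4, 1, 12, 5, tzinfo=timezone.utc)),
    Event("order-2", {"item": "fries", "quantity": 1},
          datetime(2023, 4, 1, 12, 7, tzinfo=timezone.utc)),
    Event("order-3", {"item": "cheeseburger", "quantity": 1},
          datetime(2023, 4, 2, 9, 30, tzinfo=timezone.utc)),
]
print(cheeseburgers_sold_on(stream, date(2023, 4, 1)))  # 2
```

Re-reading the whole stream for every question gets expensive quickly. That cost is one reason analytics on streaming data needs more than the stream itself.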

Streaming data has been around for decades. It gained traction in the early 1990s, when telecommunications companies used it to manage the flow of voice and data traffic over their networks. Today, streaming data is everywhere. It has expanded into many industries and applications, including IoT sensor data, financial data, web analytics, gaming behavioral data, and many more use cases.

This type of data has become an essential component of real-time analytics applications because reacting to events quickly can have a major effect on a business’s revenue. Real-time analytics on streaming data can help organizations detect patterns and anomalies, identify revenue opportunities, and respond to changing conditions, all almost instantly. However, streaming data poses a unique challenge for analytics because it requires specialized technologies and approaches.

This series will walk you through options for operationalizing streaming data. We’ll start with the basics: formats, platforms, and use cases.

Streaming Data Formats

There are a few very common general-purpose streaming data formats. They’re important to study and understand because each format has characteristics that make it better or worse for particular use cases. We’ll highlight these briefly and then move on to streaming platforms.

JSON (JavaScript Object Notation)

JSON is a lightweight, text-based format that is (usually) easy to read, making it a popular choice for data exchange. Here are a few characteristics of JSON:

- Readability: JSON is human-readable and easy to understand, making it easier to debug and troubleshoot.

- Wide support: JSON is supported by many programming languages and frameworks, making it a good choice for interoperability between different systems.

- Flexible schema: JSON allows flexible schema design, which is useful for handling data that may change over time.

Sample use case: JSON is a good choice for APIs or other interfaces that need to handle diverse data types. For example, an e-commerce website may use JSON to exchange data between its frontend and backend servers. It may also use JSON with third-party vendors that provide shipping or payment services.

Example message:
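Here is a hypothetical sale event (the event and its field names are illustrative only), written and read with Python’s standard `json` module. The `.get()` call with a default shows one way a consumer can tolerate JSON’s flexible schema when newer producers start sending optional fields.

```python
import json

# Hypothetical sale event: a key, a nested value, and an ISO 8601 timestamp.
event = {
    "key": "order-1001",
    "value": {"item": "cheeseburger", "quantity": 1, "price": 9.6},
    "timestamp": "2023-04-01T12:05:00Z",
}

message = json.dumps(event)    # human-readable text for the wire
print(message)

decoded = json.loads(message)  # back to plain Python dicts and lists
# "toppings" is a hypothetical field older producers never send:
# fall back to a default instead of failing.
toppings = decoded["value"].get("toppings", [])
print(decoded["value"]["item"], toppings)  # cheeseburger []
```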

Avro

Avro is a compact binary format designed for efficient serialization and deserialization of data. Avro also defines a JSON encoding for messages. Here are a few characteristics of Avro:

- Efficient: Avro’s compact binary format can improve performance and reduce network bandwidth usage.

- Strong schema support: Avro has a well-defined schema that enables type safety and strong data validation.

- Schema evolution: Avro schemas can evolve (for example, by adding a field with a default value) without requiring changes to existing client code, because readers resolve the writer’s schema against their own.

Sample use case: Avro is a good choice for big data platforms that need to process and analyze large volumes of log data. Avro stores and transmits that data efficiently and has strong schema support.

Example message:

`\x16cheeseburger\x02\xdc\x07\x9a\x99\x19\x41\x12\xcd\xcc\x0c\x40\xce\xfa\x8e\xca\x1f`
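Bytes like these come from Avro’s binary encoding. Field names never appear on the wire because the reader and writer share a schema. The sketch below hand-encodes a record using a few primitive-type rules from the Avro specification:

- zigzag varints for `int`/`long`
- length-prefixed UTF-8 for `string`
- 4-byte little-endian IEEE 754 for `float`

The `Sale` schema is hypothetical, and the sketch does not try to reproduce the example message byte for byte. In practice you would use a library such as the official `avro` package or `fastavro` rather than encoding by hand.

```python
import json
import struct

# Hypothetical schema; field order defines the binary layout.
SALE_SCHEMA = {
    "type": "record",
    "name": "Sale",
    "fields": [
        {"name": "item", "type": "string"},
        {"name": "quantity", "type": "int"},
        {"name": "price", "type": "float"},
    ],
}


def encode_long(n: int) -> bytes:
    """Zigzag-encode a signed 64-bit int, then write it as a base-128 varint."""
    z = (n << 1) ^ (n >> 63)
    out = bytearray()
    while z & ~0x7F:
        out.append((z & 0x7F) | 0x80)
        z >>= 7
    out.append(z)
    return bytes(out)


def encode_field(avro_type: str, value) -> bytes:
    if avro_type in ("int", "long"):
        return encode_long(value)
    if avro_type == "string":
        raw = value.encode("utf-8")
        return encode_long(len(raw)) + raw  # length prefix, then UTF-8 bytes
    if avro_type == "float":
        return struct.pack("<f", value)
    if avro_type == "double":
        return struct.pack("<d", value)
    raise NotImplementedError(f"type not covered by this sketch: {avro_type}")


def encode_record(schema: dict, record: dict) -> bytes:
    # Fields are concatenated in schema order, with no names or delimiters.
    return b"".join(
        encode_field(f["type"], record[f["name"]]) for f in schema["fields"]
    )


sale = {"item": "cheeseburger", "quantity": 1, "price": 9.6}
avro_bytes = encode_record(SALE_SCHEMA, sale)
print(avro_bytes)  # b'\x18cheeseburger\x02\x9a\x99\x19A'
print(len(avro_bytes), "bytes vs", len(json.dumps(sale)), "bytes as JSON")  # 18 bytes vs 53 bytes as JSON
```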

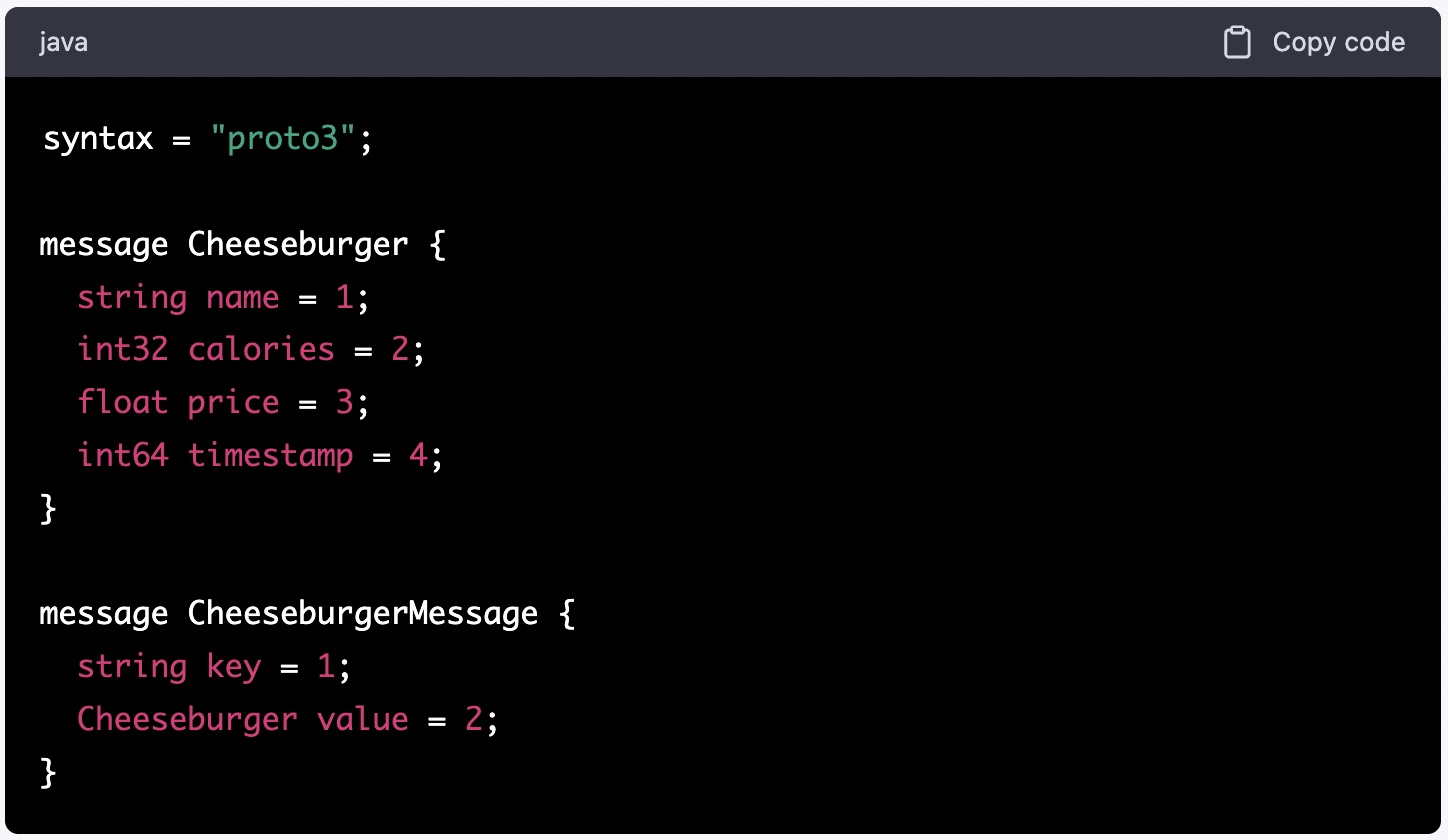

Protocol Buffers (usually called protobuf)

Protobuf is a compact binary format that, like Avro, is designed for efficient serialization and deserialization of structured data. Some characteristics of protobuf include:

- Compact: protobuf is designed to be more compact than many other serialization formats, which can further improve performance and reduce network bandwidth usage.

- Strong typing: protobuf has a well-defined schema that supports strong typing and data validation.

- Backward and forward compatibility: as long as schema changes follow protobuf’s evolution rules (for example, never reusing a field number), changing the schema won’t break existing code that uses the data.

Sample use case: protobuf works well for a real-time messaging system that needs to handle large volumes of messages. The format is well suited to efficiently encoding and decoding message data, and it also benefits from its compact size and strong typing.

Example message:
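As a hypothetical example message, the Python below hand-encodes a sale using the protobuf wire format. It illustrates why protobuf is compact and how forward compatibility works. Each field is prefixed by a varint tag that combines its field number and wire type, and a decoder can skip fields it doesn’t recognize. The `Sale` message definition (shown in a comment) is an assumption. The sketch only handles non-negative integers and a few wire types; real applications would use code generated by `protoc` instead.

```python
from __future__ import annotations

import struct

# Wire types from the protobuf encoding documentation.
VARINT, I64, LEN, I32 = 0, 1, 2, 5

# Hypothetical schema:
#   message Sale { string item = 1; int32 quantity = 2; float price = 3; }
FIELD_NAMES = {1: "item", 2: "quantity", 3: "price"}


def encode_varint(value: int) -> bytes:
    """Base-128 varint; this sketch only handles non-negative integers."""
    out = bytearray()
    while value > 0x7F:
        out.append((value & 0x7F) | 0x80)
        value >>= 7
    out.append(value)
    return bytes(out)


def decode_varint(buf: bytes, pos: int) -> tuple[int, int]:
    result, shift = 0, 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


def tag(field_number: int, wire_type: int) -> bytes:
    return encode_varint((field_number << 3) | wire_type)


def encode_sale(item: str, quantity: int, price: float) -> bytes:
    raw = item.encode("utf-8")
    return (
        tag(1, LEN) + encode_varint(len(raw)) + raw
        + tag(2, VARINT) + encode_varint(quantity)
        + tag(3, I32) + struct.pack("<f", price)
    )


def decode_sale(buf: bytes) -> dict:
    result, pos = {}, 0
    while pos < len(buf):
        key, pos = decode_varint(buf, pos)
        field_number, wire_type = key >> 3, key & 0x7
        if wire_type == VARINT:
            value, pos = decode_varint(buf, pos)
        elif wire_type == LEN:
            length, pos = decode_varint(buf, pos)
            value, pos = buf[pos:pos + length], pos + length
        elif wire_type in (I32, I64):
            size = 4 if wire_type == I32 else 8
            value, pos = buf[pos:pos + size], pos + size
        else:
            raise ValueError(f"wire type {wire_type} not handled in this sketch")
        if field_number not in FIELD_NAMES:
            continue  # unknown field (e.g. from a newer schema): skip it
        if field_number == 1:
            value = value.decode("utf-8")
        elif field_number == 3:
            value = struct.unpack("<f", value)[0]
        result[FIELD_NAMES[field_number]] = value
    return result


message = encode_sale("cheeseburger", 1, 9.6)
print(len(message))  # 21

# A newer producer appends a hypothetical field 4 (say, a store ID);
# this older decoder skips it and still reads the known fields.
newer = message + tag(4, VARINT) + encode_varint(42)
print(decode_sale(newer)["item"])  # cheeseburger
```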

It’s probably clear that the choice of format should be driven by the use case. Pay special attention to your expected data volume, processing needs, and compatibility with other systems. That said, when in doubt, JSON has the widest support and offers the most flexibility.

Streaming information platforms

OK, we’ve covered the basics of streaming data and common formats. Next, we need to talk about how to move this data around, process it, and put it to use. This is where streaming platforms come in.

It’s possible to go very deep on streaming platforms. This blog won’t cover them in depth. Instead, it will present popular options, cover the high-level differences between them, and offer a few important considerations for choosing a platform for your use case.

Apache Kafka

Apache Kafka, or Kafka for short, is an open-source distributed streaming platform (yes, that is a mouthful) that enables real-time processing of large volumes of data. It is the single most popular streaming platform. It provides all the basic features you’d expect, like data streaming, storage, and processing, and is widely used for building real-time data pipelines and messaging systems. It supports various data processing models, such as stream and batch processing (both covered in part 2 of this series) and complex event processing.

Long story short, Kafka is extremely powerful and widely used, with a large community to tap for best practices and support. It also offers a variety of deployment options. A few noteworthy points:

- Self-managed Kafka can be deployed on-premises or in the cloud. It’s open source, so it’s “free”, but be forewarned that its complexity will require significant in-house expertise.

- Kafka can be deployed as a managed service through Confluent Cloud or Amazon Managed Streaming for Apache Kafka (MSK). Both options simplify deployment and scaling significantly; you can get set up in just a few clicks.

- Kafka doesn’t have many built-in ways to perform analytics on event data.
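Kafka’s core abstraction is a partitioned, append-only log. The toy Python model below is not Kafka’s API and not a Kafka client. It only sketches three ideas under simplifying assumptions:

- Records with the same key land in the same partition, which preserves per-key order.
- Each record gets an offset.
- Reading doesn’t delete anything, so consumers track their own positions and can replay.

`ToyTopic`, `ToyConsumer`, and the use of `zlib.crc32` as the partitioning hash are all hypothetical stand-ins. Kafka’s default partitioner uses a different hash.

```python
from __future__ import annotations

import zlib
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Record = Tuple[str, str]  # (key, value)


@dataclass
class ToyTopic:
    """In-memory sketch of a partitioned, append-only log (not a Kafka client)."""
    num_partitions: int
    partitions: List[List[Record]] = field(init=False, default_factory=list)

    def __post_init__(self) -> None:
        self.partitions = [[] for _ in range(self.num_partitions)]

    def produce(self, key: str, value: str) -> Tuple[int, int]:
        # Same key -> same partition, so per-key ordering is preserved.
        # crc32 is a stand-in; Kafka's default partitioner uses another hash.
        partition = zlib.crc32(key.encode("utf-8")) % self.num_partitions
        self.partitions[partition].append((key, value))
        return partition, len(self.partitions[partition]) - 1  # (partition, offset)

    def read(self, partition: int, offset: int) -> List[Record]:
        # Reading never removes records, so any reader can replay from any offset.
        return self.partitions[partition][offset:]


@dataclass
class ToyConsumer:
    topic: ToyTopic
    positions: Dict[int, int] = field(default_factory=dict)  # partition -> next offset

    def poll(self, partition: int) -> List[Record]:
        start = self.positions.get(partition, 0)
        records = self.topic.read(partition, start)
        self.positions[partition] = start + len(records)
        return records


topic = ToyTopic(num_partitions=3)
p1, first = topic.produce("store-7", "cheeseburger sold")
p2, second = topic.produce("store-7", "fries sold")
consumer = ToyConsumer(topic)
print(consumer.poll(p1))  # both store-7 records, in order
print(consumer.poll(p1))  # [] (this consumer has already read them)
print(topic.read(p1, 0))  # another reader can still replay from offset 0
```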

AWS Kinesis

Amazon Kinesis is a fully managed, real-time data streaming service provided by AWS. Like Kafka, it is designed to collect, process, and analyze large volumes of streaming data in real time. There are a few notable differences between Kafka and Kinesis. The biggest is that Kinesis is a proprietary, fully managed service provided by Amazon Web Services (AWS).

The benefit of being proprietary is that Kinesis can easily make streaming data available for downstream processing and storage in services such as Amazon S3, Amazon Redshift, and Amazon OpenSearch Service (formerly Amazon Elasticsearch Service). It’s also tightly integrated with other AWS services like AWS Lambda, AWS Glue, and Amazon SageMaker. This makes it easy to orchestrate end-to-end streaming data pipelines without managing the underlying infrastructure.

There are some caveats to be aware of that will matter for some use cases:

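- Kafka has clients for a variety of programming languages, including Java, Python, and C++. Kinesis’s consumer tooling, by contrast, centers on Java and other JVM languages (the Kinesis Client Library is Java-based), although AWS SDKs in other languages can also read and write streams.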

- Kafka’s retention is configurable and can be set to unlimited. Kinesis retains data for 24 hours by default, extendable up to a fixed maximum (up to 365 days with long-term retention).

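- Kinesis isn’t designed for a large number of consumers: by default, all consumers reading from a shard share its read throughput, although enhanced fan-out can give individual consumers dedicated throughput.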
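Here is a back-of-the-envelope Python calculation showing why consumer count matters for Kinesis. It assumes the per-shard read limit AWS documents for Kinesis Data Streams at the time of writing: 2 MB/s per shard, shared by all standard consumers or dedicated to each consumer with enhanced fan-out. Check current AWS service quotas before relying on these numbers. The function name is hypothetical.

```python
# Assumed per-shard read limit for Kinesis Data Streams
# (verify against current AWS service quotas).
SHARD_READ_MB_PER_S = 2.0


def read_mb_per_s_per_consumer(shards: int, consumers: int,
                               enhanced_fan_out: bool = False) -> float:
    """Approximate read throughput available to each consumer of a stream."""
    if consumers < 1:
        raise ValueError("need at least one consumer")
    stream_read = SHARD_READ_MB_PER_S * shards
    if enhanced_fan_out:
        return stream_read  # each registered consumer gets its own per-shard limit
    return stream_read / consumers  # standard consumers split the shared limit


for n in (1, 5, 10):
    print(n, read_mb_per_s_per_consumer(shards=4, consumers=n))
# 1 8.0
# 5 1.6
# 10 0.8
```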

Azure Event Hubs and Azure Service Bus

Both of these fully managed Microsoft services handle streaming data on Microsoft Azure, but they differ in important ways in design and functionality. There’s enough material here for its own blog post, but we’ll cover the high-level differences briefly.

Azure Event Hubs is a highly scalable data streaming platform designed for collecting, transforming, and analyzing large volumes of data in real time. It is ideal for building data pipelines that ingest data from a wide range of sources, such as IoT devices, clickstreams, and social media feeds. Event Hubs is optimized for high-throughput, low-latency streaming scenarios and can process millions of events per second.

Azure Service Bus is a messaging service that provides reliable message queuing and publish-subscribe messaging patterns. It is designed for decoupling application components and enabling asynchronous communication between them. Service Bus supports a variety of messaging patterns and is optimized for reliable message delivery. It can handle high-throughput scenarios, but its focus is on messaging, which doesn’t typically require real-time processing or stream processing.
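The core design difference can be sketched in a few lines of Python. A queue (the Service Bus queue model) hands each message to one receiver and removes it once received. An event stream (the Event Hubs model) keeps events in a log that independent readers consume at their own offsets. Both classes below are hypothetical in-memory toys, not the Azure SDK, and they ignore locking, acknowledgements, and retention.

```python
from collections import deque
from typing import Deque, List, Optional


class ToyQueue:
    """Queue semantics: each message goes to exactly one receiver."""

    def __init__(self) -> None:
        self._messages: Deque[str] = deque()

    def send(self, message: str) -> None:
        self._messages.append(message)

    def receive(self) -> Optional[str]:
        # Receiving removes the message, so competing receivers never see it twice.
        return self._messages.popleft() if self._messages else None


class ToyEventLog:
    """Stream semantics: events stay put; each reader tracks its own offset."""

    def __init__(self) -> None:
        self._events: List[str] = []

    def append(self, event: str) -> None:
        self._events.append(event)

    def read_from(self, offset: int) -> List[str]:
        return self._events[offset:]


queue, log = ToyQueue(), ToyEventLog()
for msg in ("order-1", "order-2"):
    queue.send(msg)
    log.append(msg)

print(queue.receive(), queue.receive(), queue.receive())  # order-1 order-2 None
print(log.read_from(0), log.read_from(0))  # both readers see every event
```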

Much as Amazon Kinesis integrates with other AWS services, Azure Event Hubs and Azure Service Bus can be excellent choices if your software is built on Microsoft Azure.

Use cases for real-time analytics on streaming data

We’ve covered the basics of streaming data formats and delivery platforms, but this series is primarily about how to leverage streaming data for real-time analytics. So we’ll now shine some light on how leading organizations are putting streaming data to use in the real world.

Personalization

Organizations are using streaming data to feed real-time personalization engines for e-commerce, adtech, media, and more. Imagine a shopping platform that infers a user is interested in books, then history books, and then history books about Darwin’s voyage to the Galapagos. Streaming data platforms are well suited to capturing and transporting large amounts of data at low latency. As a result, companies are beginning to use that data to derive intent and predict what users might like to see next. Rockset has seen quite a bit of interest in this use case, and companies are driving significant incremental revenue by leveraging streaming data to personalize user experiences.
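Here is a deliberately simplified Python sketch of deriving intent from a clickstream. It keeps a short sliding window of each user’s recent category views and treats the most frequent one as that user’s current interest. The window size, category strings, and function names are hypothetical; production personalization engines use far richer models.

```python
from collections import Counter, defaultdict, deque
from typing import Deque, Dict, Optional

WINDOW = 5  # hypothetical: only the last few clicks count as current intent

recent_clicks: Dict[str, Deque[str]] = defaultdict(lambda: deque(maxlen=WINDOW))


def on_click(user_id: str, category: str) -> None:
    # A deque with maxlen drops the oldest click once the window is full.
    recent_clicks[user_id].append(category)


def current_interest(user_id: str) -> Optional[str]:
    clicks = recent_clicks.get(user_id)
    if not clicks:
        return None
    # Most frequent category in the window; ties go to the one seen first.
    return Counter(clicks).most_common(1)[0][0]


for category in ["books", "books/history", "books/history",
                 "books/history/darwin", "books/history/darwin", "books/history/darwin"]:
    on_click("u1", category)
print(current_interest("u1"))  # books/history/darwin
```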

Anomaly Detection

Fraud and anomaly detection are among the more popular use cases for real-time analytics on streaming data. Organizations capture user behavior through event streams, enrich those streams with historical data, and use online feature stores to detect anomalous or fraudulent user behavior. Unsurprisingly, this use case is becoming quite common at fintech and payments companies looking to bring a real-time edge to alerting and monitoring.
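Here is a minimal Python sketch of the “enrich with history, then score” pattern. A per-user running mean and standard deviation (computed with Welford’s online algorithm) serve as the historical baseline. A new transaction is flagged when its z-score exceeds a threshold. The in-memory `features` dict is a hypothetical stand-in for an online feature store, and the threshold and warm-up size are illustrative assumptions.

```python
import math
from dataclasses import dataclass
from typing import Dict


@dataclass
class RunningStats:
    """Welford's online mean/variance: one pass, constant memory per user."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0

    def update(self, x: float) -> None:
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)

    def stddev(self) -> float:
        # Sample standard deviation; undefined (0.0 here) with fewer than 2 points.
        return math.sqrt(self.m2 / (self.count - 1)) if self.count > 1 else 0.0


# Hypothetical stand-in for an online feature store keyed by user ID.
features: Dict[str, RunningStats] = {}


def is_anomalous(user_id: str, amount: float, threshold: float = 3.0,
                 min_history: int = 5) -> bool:
    stats = features.setdefault(user_id, RunningStats())
    flagged = False
    if stats.count >= min_history and stats.stddev() > 0:
        z = abs(amount - stats.mean) / stats.stddev()
        flagged = z > threshold
    stats.update(amount)  # fold this event into the user's history afterwards
    return flagged


for amount in (20.0, 25.0, 22.0, 18.0, 24.0, 21.0):
    is_anomalous("user-42", amount)
print(is_anomalous("user-42", 950.0))  # True
```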

Video Gaming

Online games typically generate massive amounts of streaming data, much of which is now being used for real-time analytics. Studios can leverage streaming data to tune matchmaking heuristics, ensuring players are matched at an appropriate skill level. Many studios boost player engagement and retention with live metrics and leaderboards. Finally, event streams can help identify anomalous behavior associated with cheating.
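Here is a small Python illustration of a live leaderboard fed by score events. It keeps each player’s best score and reads off the top k on demand. The event shape and names are hypothetical; at scale, this state would typically live in a database rather than a Python dict.

```python
import heapq
from typing import Dict, List, Tuple

best_scores: Dict[str, int] = {}


def on_score_event(player: str, score: int) -> None:
    # Keep only each player's best score as events stream in.
    if player not in best_scores or score > best_scores[player]:
        best_scores[player] = score


def leaderboard(k: int = 3) -> List[Tuple[str, int]]:
    # For small k, nlargest avoids sorting every player.
    return heapq.nlargest(k, best_scores.items(), key=lambda entry: entry[1])


for player, score in [("ana", 120), ("bo", 300), ("cy", 180), ("ana", 410), ("di", 90)]:
    on_score_event(player, score)
print(leaderboard())  # [('ana', 410), ('bo', 300), ('cy', 180)]
```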

Logistics

Another massive consumer of streaming data is the logistics industry. Paired with an appropriate real-time analytics stack, streaming data helps leading logistics organizations do the following:

- manage and monitor the health of fleets
- receive alerts about the health of equipment
- recommend preventive maintenance to keep fleets up and running

More advanced uses include optimizing delivery routes with real-time data from GPS devices, orders, and delivery schedules.
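Here is a minimal Python sketch of an equipment-health alert on a stream of sensor readings. It fires only when a truck’s engine temperature exceeds a limit for several consecutive readings, so a single noisy spike doesn’t page anyone. The threshold, streak length, truck IDs, and function name are all hypothetical.

```python
from collections import defaultdict
from typing import Dict

TEMP_LIMIT_C = 105.0    # hypothetical engine-temperature threshold
CONSECUTIVE_NEEDED = 3  # require a sustained breach, not a single spike

breach_streak: Dict[str, int] = defaultdict(int)


def on_reading(truck_id: str, engine_temp_c: float) -> bool:
    """Return True when this reading should raise a maintenance alert."""
    if engine_temp_c > TEMP_LIMIT_C:
        breach_streak[truck_id] += 1
    else:
        breach_streak[truck_id] = 0
    # Alert once per streak, at the reading where it first reaches the limit.
    return breach_streak[truck_id] == CONSECUTIVE_NEEDED


readings = [("truck-9", t) for t in (98.0, 107.0, 109.0, 96.0, 106.0, 108.0, 111.0, 112.0)]
alerts = [i for i, (truck, temp) in enumerate(readings) if on_reading(truck, temp)]
print(alerts)  # [6]
```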

Domain-driven design, data mesh, and messaging services

Streaming data can be used to implement event-driven architectures that align with domain-driven design principles. Instead of polling for updates, microservices consume a continuous flow of events. Events can represent changes in the state of the system, user actions, or other domain-specific information. By modeling the domain in terms of events, you can achieve loose coupling, scalability, and flexibility.
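Here is a minimal in-process Python sketch of the event-driven pattern. A publisher emits a domain event without knowing which services consume it, and each subscriber reacts independently. `EventBus`, `OrderPlaced`, and the two handler “services” are hypothetical. In a real system, a streaming platform such as Kafka would play the bus’s role and each handler would be a separate microservice.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Any, Callable, DefaultDict, List


@dataclass(frozen=True)
class OrderPlaced:
    """A domain event: an immutable fact about something that already happened."""
    order_id: str
    item: str


Handler = Callable[[Any], None]


class EventBus:
    """Toy in-process bus; a streaming platform fills this role in production."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[type, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: type, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event: Any) -> None:
        # The publisher never references its consumers: that is the loose coupling.
        for handler in self._subscribers[type(event)]:
            handler(event)


kitchen_queue: List[str] = []
sales_by_item: DefaultDict[str, int] = defaultdict(int)


def kitchen_service(event: OrderPlaced) -> None:
    kitchen_queue.append(event.item)


def analytics_service(event: OrderPlaced) -> None:
    sales_by_item[event.item] += 1


bus = EventBus()
bus.subscribe(OrderPlaced, kitchen_service)
bus.subscribe(OrderPlaced, analytics_service)
bus.publish(OrderPlaced("order-1", "cheeseburger"))
print(kitchen_queue, dict(sales_by_item))  # ['cheeseburger'] {'cheeseburger': 1}
```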

Log aggregation

Streaming data can be used to aggregate log data in real time from systems across an organization. Logs can be streamed to a central platform (usually an OLAP database; more on this in parts 2 and 3), where they can be processed and analyzed for alerting, troubleshooting, monitoring, or other purposes.
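Conceptually, log aggregation means interleaving many time-ordered log streams into one and then analyzing the combined stream. The Python sketch below does that with the standard library’s `heapq.merge` and counts errors per service. The log tuples and names are hypothetical, and the sketch assumes each input stream is already sorted by timestamp.

```python
import heapq
from collections import Counter
from typing import Iterable, Iterator, Tuple

LogLine = Tuple[float, str, str, str]  # (timestamp, service, level, message)

# Hypothetical per-service streams, each already ordered by timestamp.
api_logs = [(1.0, "api", "INFO", "GET /menu"),
            (4.0, "api", "ERROR", "timeout calling payments")]
payment_logs = [(2.0, "payments", "INFO", "charge ok"),
                (3.5, "payments", "ERROR", "card declined")]


def aggregate(*streams: Iterable[LogLine]) -> Iterator[LogLine]:
    # heapq.merge lazily interleaves sorted inputs into one time-ordered stream.
    return heapq.merge(*streams, key=lambda line: line[0])


errors_by_service: Counter = Counter()
for ts, service, level, message in aggregate(api_logs, payment_logs):
    if level == "ERROR":
        errors_by_service[service] += 1
    print(ts, service, level, message)
print(dict(errors_by_service))  # {'payments': 1, 'api': 1}
```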

Conclusion

We’ve covered a lot in this blog, from formats to platforms to use cases, but there’s a ton more to learn. There are some interesting and meaningful differences between real-time analytics on streaming data, stream processing, and streaming databases, which is exactly what post 2 in this series will focus on. In the meantime, if you’re looking to get started with real-time analytics on streaming data, Rockset has built-in connectors for Kafka, Confluent Cloud, MSK, and more. Start your free trial today, with $300 in credits, no credit card required.